TL;DR

This blog presents comprehensive experimental findings on optimizing Vision Language Models (VLMs) for object detection through reinforcement learning, focusing on Qwen2.5-VL-3B and 7B models. Key findings include:

- Reward Engineering Breakthroughs:

- Weighted sum with cosine reward achieved best performance (+1.8% mAP over baseline AP rewards)

- Novel repetition penalty helped reduce redundant detections while maintaining high precision (77.08%)

- Model Size Impact:

- 7B models showed stronger initial format adherence (0.75 vs 0.4 format score)

- 3B models demonstrated more potential for improvement through prompt engineering

- Training Data Insights:

- Pure Object Detection outperformed mixed approaches (+2.1% mAP over mixed training)

- Small object specialized training improved precision (+16.37%) with minimal recall trade-off

The findings provide practical guidelines for VLM training optimization, particularly highlighting the importance of reward engineering and model-specific prompt strategies. Results demonstrate that careful tuning of these elements can significantly enhance object detection performance while maintaining model efficiency.

Introduction

This blog post summarizes our key findings and insights from extensive experimentation with Reinforcement Learning (RL) for object detection in Vision Language Models (VLMs), specifically focusing on Qwen2.5-VL-3B and 7B. Our experiments covered various aspects including training methodologies, data preparation strategies, reward functions, and prompt engineering.

1. Training Options Overview

1.1 Training Datasets

- COCO Dataset: Standard object detection dataset with 80 categories

- OVDEval Dataset: Object Vision Detection Evaluation dataset https://github.com/om-ai-lab/OVDEval

- D³ Dataset: Diverse object detection dataset https://github.com/shikras/d-cube

1.2 Evaluation Datasets

- COCO_filtered (COCO_pos_1): Categories with fewer than 10 annotation boxes

- COCO_pos_2: Images where each category has only one bounding box

- COCO_pos_3: Images where total box count across categories is under 10

- REFCOCO: Reference expression comprehension benchmark (in-domain)

- REFGTA: Reference expression comprehension benchmark (out-of-domain)

- OVDEval: Test set for comprehensive object detection capabilities

1.3 RL Reward Options

- AP50: Average Precision at IoU threshold of 0.5

- AP: Average Precision across multiple IoU thresholds

- Weighted_sum: Combination of position accuracy and detection completeness

- Cosine reward: Promotes response efficiency

- Repetition reward: Penalizes repetitive patterns in outputs

1.4 Training Methods

- Supervised Fine-Tuning (SFT): Direct learning from human annotations

- Reinforcement Learning (RL): Learning from reward signals

- Baseline Models: Qwen2.5-VL-3B and Qwen2.5-VL-7B

1.5 Prompt Formats

- Standard prompt: "Output the thinking process in <think> </think> and final answer in <answer> </answer> tags."

- Enhanced prompt: "First thinks about the reasoning process in the mind and then provides the user with the answer. The reasoning process and answer are enclosed within <think> </think> and <answer> </answer> tags, respectively, i.e., <think> reasoning process here </think><answer> answer here </answer>"

- System prompt variations: Alternative configurations for model instruction

2. Evaluation Datasets Options

2.1 COCO Dataset Evaluation Modes

COCO_filtered (COCO_pos_1) Dataset

The COCO_filtered dataset is created from the COCO dataset's instances_val2017.json file. It filters out categories with more than 10 annotation boxes, ensuring that only categories with fewer boxes are included.

COCO_pos_2 Dataset

The COCO_pos_2 dataset focuses on images where each category has only one bounding box.

COCO_pos_3 Dataset

The COCO_pos_3 dataset is also derived from the COCO dataset's instances_val2017.json file. It ensures that the total number of boxes across all categories does not exceed 10.

In the evaluation of the COCO dataset, we have 3 modes:

- All mode: Includes all 80 categories in COCO

- Positive mode: Includes only categories present in the current image

- 1bbox per category mode: Includes only one bounding box per category

2.2 OVD Dataset Evaluation Modes

- All mode: Combines both positive and negative prompts

- Positive mode: Includes only categories present in the image

3. Training Dataset Findings

3.1 Small Object Detection Trade-offs

When training with MAP reward on small objects (area < 0.5% of image) vs. regular single-box data:

| Training Data | COCO_filtered (mAP) | Precision (IoU=0.5) | Recall (IoU=0.5) |

|---|---|---|---|

| Small objects only | 22.3 | 71.39 | 43.36 |

| Regular single-box | 21.1 | 55.02 | 49.46 |

Key findings:

- Small object training improves precision (+16.37 points)

- Slight decrease in recall (-6.1 points)

- Consider balanced datasets unless small object detection is a primary goal

3.2 Single Region Label Impact

The descriptions of Single Region Label and OD (Object Detection):

Single Region Label:

The Pure Single Region method takes a different approach. Instead of detecting the object and its boundaries, it relies on a given coordinate and asks the model to classify which object from a list of predefined options is located within the provided coordinates. Essentially, this is akin to a multiple-choice question, where the model is given a location (a coordinate box) in the image and must choose the correct object category from a set of possible options. This method emphasizes classification based on specific coordinates, and matches the region to the appropriate object class.

OD (Object Detection):

The OD approach focuses on detecting the position and boundaries of an object within an image. The model identifies the object and its location by drawing bounding boxes, and performance is measured using metrics like mAP and IoU. OD not only classifies the object but also determines its position in the image.

Comparison of different training approaches:

| Training Approach | COCO_filtered (mAP) | Precision (IoU=0.5) | Recall (IoU=0.5) |

|---|---|---|---|

| Pure Single Region | 18.4 | 66.01 | 34.57 |

| Mixed OD + Single Region | 21.7 | 63.98 | 46.75 |

| Pure OD (Single class) | 23.8 | 65.58 | 48.92 |

Key findings:

- Pure Object Detection (OD) outperforms mixed approaches

- Mixed datasets outperform pure single region training

- Including Single Region Label data may hinder object detection performance

4. RL Reward Function Optimization

4.1 Comparison of Different Reward Functions

| Training data | Reward method | COCO_pos_3 (mAP) | Precision (IoU=0.5) | Recall (IoU=0.5) |

|---|---|---|---|---|

| OVDEval | AP50 | 27.1 | 70.17 | 58.05 |

| OVDEval | AP | 27.4 | 71.11 | 57.86 |

| OVDEval | weighted_sum | 27.5 | 71.32 | 57.72 |

| OVDEval | weighted_sum, cosine reward | 29.3 | 76.95 | 59.11 |

| OVDEval | weighted_sum, cosine reward, repetition reward | 28.8 | 77.08 | 57.98 |

1. AP or AP50 For Bounding Box:

In our experiments, we utilized ovdeval as the training dataset and COCO_pos_3 for validation. The average precision (AP) score improved from 27.1 to 27.4. This single comparison highlighted that AP consistently outperforms AP50. The rationale behind this is that AP50, with its binary thresholding, offers limited feedback, whereas AP provides a more granular, continuous spectrum of feedback. This continuous feedback mechanism enables the model to refine its bounding box predictions, leading to enhanced precision and overall model performance.

2. Reward rule design

During our exploration, we considered implementing a customized rule for the object detection task. The focus is primarily on two aspects: the spatial overlap between predicted and ground truth boxes, and the overall detection completeness. Spatial overlap is typically measured using the Intersection over Union (IoU), which is the ratio of the area of overlap to the area of union between two boxes. A higher IoU indicates that the predicted box is closer in spatial position to the ground truth box. In terms of detection completeness, it is important to consider both missed detections and false alarms. Missed detections refer to real targets that were not detected, while false alarms refer to non-target areas that were incorrectly detected. Completeness is assessed by calculating the miss rate and false alarm rate, with the completeness score computed as

completeness = 1 - (miss rate + false alarm rate)/2

This reflects the overall thoroughness of the detection. To form a comprehensive score, the function combines position accuracy and detection completeness through a weighted average. When matching predicted and ground truth boxes, a greedy matching strategy is employed, which involves iteratively selecting the pair of boxes with the highest IoU until no more satisfactory matches can be made. It is important to note that if the predicted label does not match the ground truth label, the IoU is set to 0, even if the IoU itself is high, affecting the final score. This multi-dimensional reward method, which is named as weighted_sum, provides a comprehensive metric to reflect the performance of object detection models. We implemented this reward metric into training and compared it to the AP reward. The results show that the weighted_sum reward can further boost the mAP score to 27.5.

3. Cosine reward and repetition reward

Inspired by the paper "Demystifying Long Chain-of-Thought Reasoning in LLMs" (https://arxiv.org/abs/2502.03373), we implemented cosine and repetition rewards in our experiments. The cosine reward encourages a more efficient reasoning process by promoting shorter responses when a correct answer has already been reached. Conversely, for incorrect answers, the cosine reward incentivizes further exploration until the correct answer is found. This approach helps to converge the response length to a reasonable size while avoiding unnecessary reasoning.

In addition, we implemented a repetition reward to penalize n-gram patterns, as we observed instances of repetition when generating responses. Given that our focus is on the Object Vision Detection (OVD) task, it is likely that multiple objects are present within a single image. Therefore, we parse the OVD output JSON and calculate the n-grams based on the elements contained within it.

We found that the implementation of cosine and repetition rewards can converge to the same reasoning process during single training sessions. However, different training attempts may lead to the convergence of distinct reasoning patterns. Below, we present several interesting reasoning patterns observed during our experiments.

problem:

Please carefully observe the image and detect the following targets: plastic chair.Output each detected targets' bbox coordinates in JSON format. The format of the bbox coordinates is: ```json

[{"bbox_2d": [x1, y1, x2, y2], "label": "target name"}, {"bbox_2d": [x1, y1, x2, y2], "label": "target name"}]

```. If there are no such targets in the image, simply respond with None.

Content: <think>

4

</think>

<answer>

```json

[

{"bbox_2d": [38, 95, 113, 164], "label": "plastic chair"},

{"bbox_2d": [130, 90, 177, 150], "label": "plastic chair"},

{"bbox_2d": [174, 89, 222, 140], "label": "plastic chair"},

{"bbox_2d": [222, 86, 258, 135], "label": "plastic chair"}

]

```

</answer>problem:

Please carefully observe the image and detect the following targets: iron door.Output each detected targets' bbox coordinates in JSON format. The format of the bbox coordinates is: ```json

[{"bbox_2d": [x1, y1, x2, y2], "label": "target name"}, {"bbox_2d": [x1, y1, x2, y2], "label": "target name"}]

```. If there are no such targets in the image, simply respond with None.

Content: <think>

image.

</think>

<answer>

```json

[

{"bbox_2d": [97, 91, 406, 718], "label": "iron door"}

]

```

</answer>problem: Please carefully check the image and detect the following objects: ['a fisherman standing on the shore with his body soaked in water', 'a street food truck without an umbrella', 'person covered with armor', 'woodpecker on the side of the trunk', 'unused microphone stand on stage']. Output the bbox coordinates of detected objects in <answer></answer>. The bbox coordinates in Markdown format should be:

```json

[{"bbox_2d": [x1, y1, x2, y2], "label": "object name"}]

```

If no targets are detected in the image, simply respond with "None".

Content: <think>

I carefully analyzed the image to locate specific objects.

</think>

<answer>

```json

[{"bbox_2d": [294, 221, 542, 442], "label": "woodpecker on the side of the trunk"}]

```

</answer>When we introduce the cosine reward, we noticed a notable improvement in performance, with the mAP rising to 29.3. This increase is accompanied by a significant boost in precision, which reaches 76.95, and a slight improvement in recall to 59.11. Furthermore, when we add the repetition reward to the weighted sum and cosine reward combination, the mAP slightly decreases to 28.8. While the precision remains high at 77.08, the Recall drops to 57.98. This suggests that while the repetition reward may help in reducing redundancy in predictions, it could also lead to a trade-off where the model becomes overly conservative, potentially missing out on capturing some relevant instances. The overall results indicate that the addition of cosine rewards significantly enhances the model's performance, particularly in terms of precision.

5. Prompt Engineering Findings

We have made a series of experiments on the 3B model with OVDEval with the following discoveries.

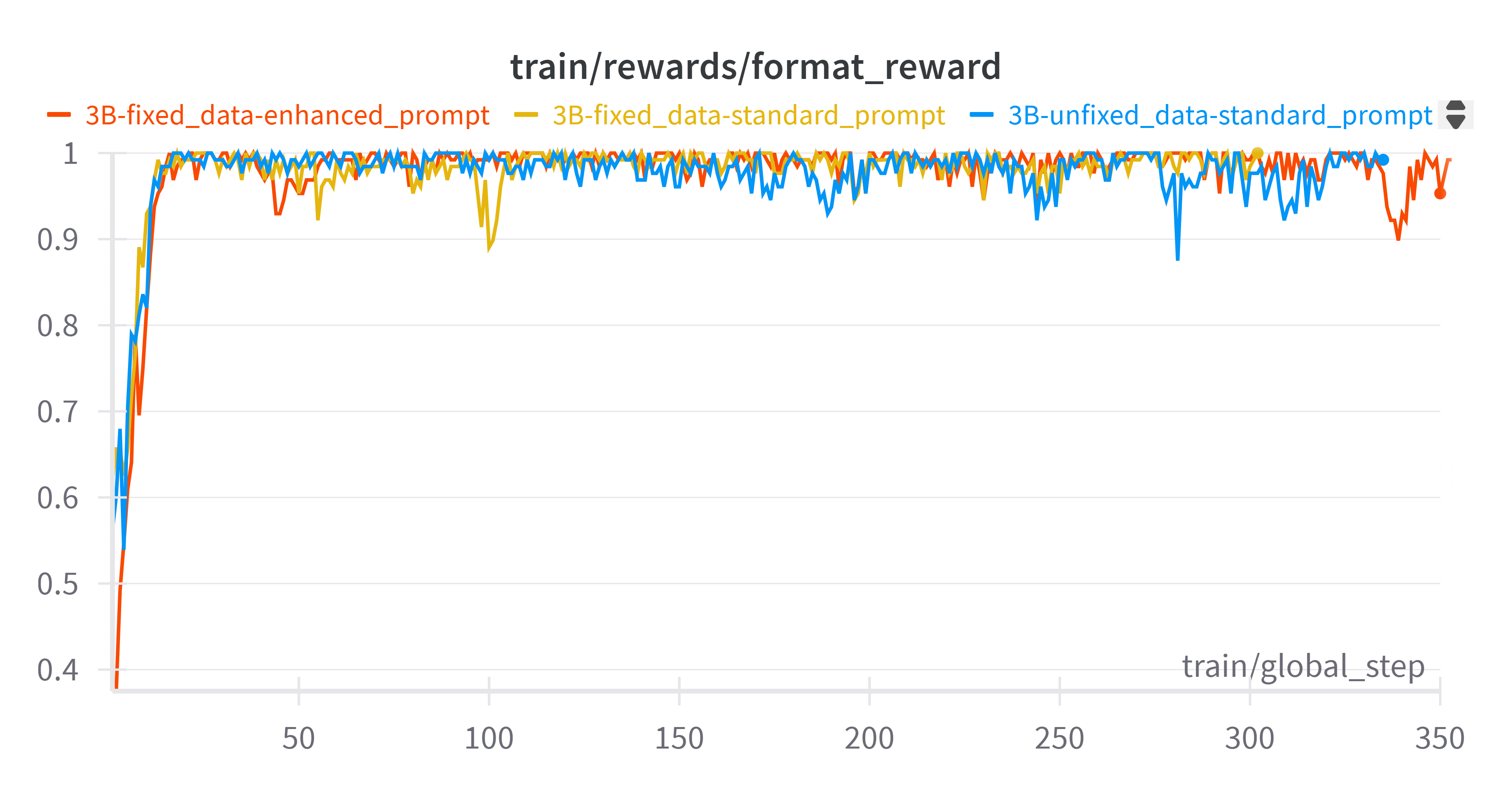

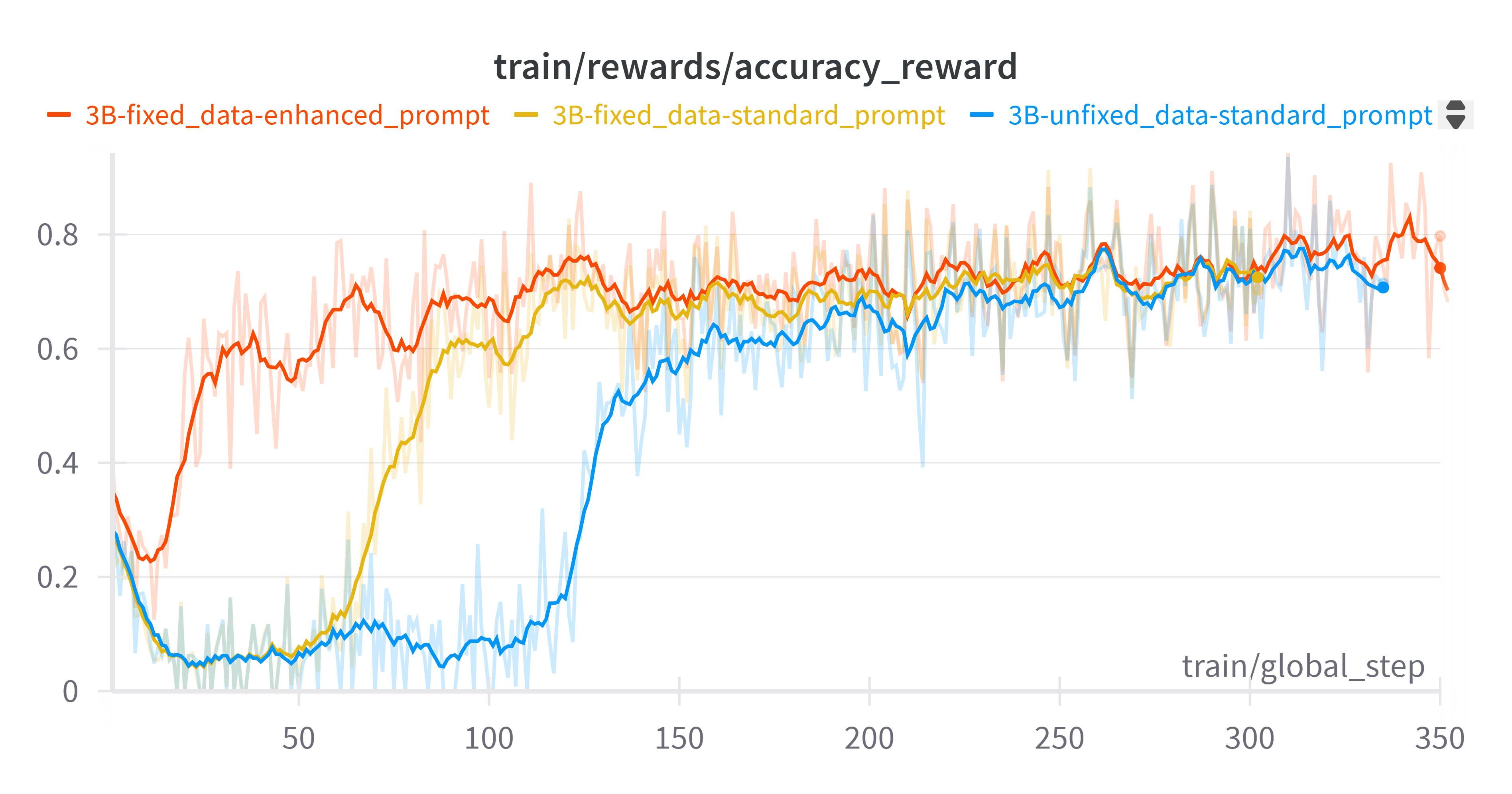

Format rewards on 3B model during training

Accuracy rewards on 3B model during training

5.1 Impact of Minor Grammatical Issues

Even small grammatical issues (like a missing space) significantly affected training convergence and on 3B model.

At the beginning of training on the 3B model using the OVDEval data (using data with grammatical problems and standard prompt). We observed that accuracy reward did not rise as the format reward finished converging (around 15 steps), but begin to up until around 125 steps. After checking the training parameter configurations, we found that the dataset had a problem of missing spaces, we fixed this problem and tried training again. It was found that the model did speed up convergence rising at around 65 steps. However, while making the accuracy reward of the training start to rise faster, eventually converges to the same value (around 0.7).

Example of problematic data:

Missing space data

<image>\n Please carefully observe the image and detect the following targets: person sit on motorcycle; motorcycle is sat on by person.Output each detected targets' bbox coordinates in JSON format. The format of the bbox coordinates is: \`\`\`json\n\[{\"bbox\_2d\": \[x1, y1, x2, y2\], \"label\": \"target name\"}, {\"bbox\_2d\": \[x1, y1, x2, y2\], \"label\": \"target name\"}\]\n\`\`\`. If there are no such targets in the image, simply respond with None.Fixed data

<image>\n Please carefully observe the image and detect the following targets: person sit on motorcycle; motorcycle is sat on by person. Output each detected targets' bbox coordinates in JSON format. The format of the bbox coordinates is: \`\`\`json\n\[{\"bbox\_2d\": \[x1, y1, x2, y2\], \"label\": \"target name\"}, {\"bbox\_2d\": \[x1, y1, x2, y2\], \"label\": \"target name\"}\]\n\`\`\`. If there are no such targets in the image, simply respond with None.5.2 Prompt Structure Optimization

Prompt modifications can improve the efficiency of training convergence on 3B model.

Although the accuracy reward can start to converge faster (around 65 steps) after correcting the data, it still did not match the trend of the format reward (around 15 steps). We then tried to modify the prompt without changing the other training settings, and finally the accuracy reward of the training could rise immediately after the format reward finished converging (around 15 steps). The modification of the prompt still did not improve the final accuracy reward value (around 0.7) although speed of convergence increase again during training

Enhanced prompt

"First thinks about the reasoning process in the mind and then provides the user with the answer. The reasoning process and answer are enclosed within <think> </think> and <answer> </answer> tags, respectively, i.e., <think> reasoning process here </think><answer> answer here </answer>"5.3 Different Robustness of 7B and 3B Model to Prompt

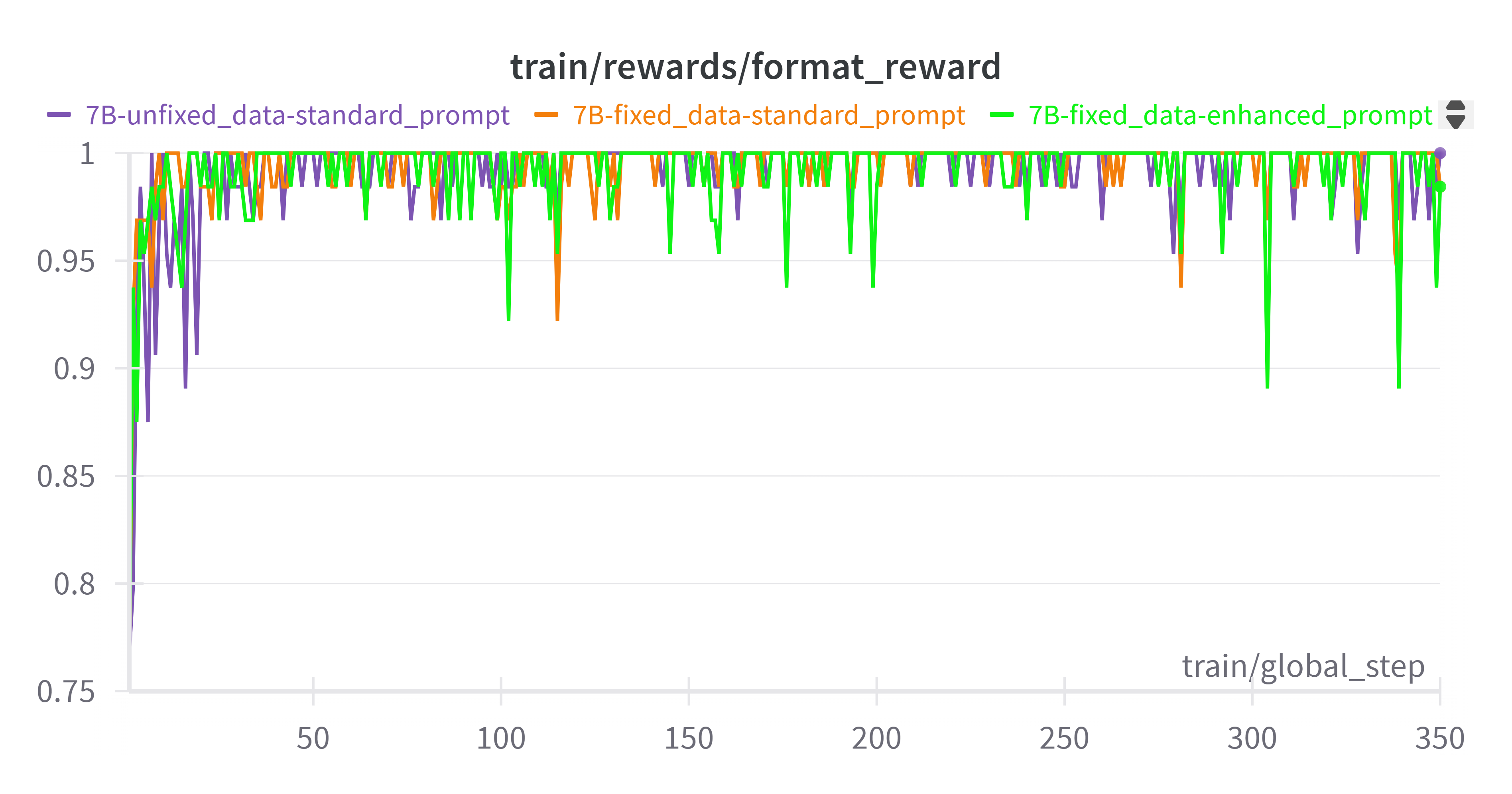

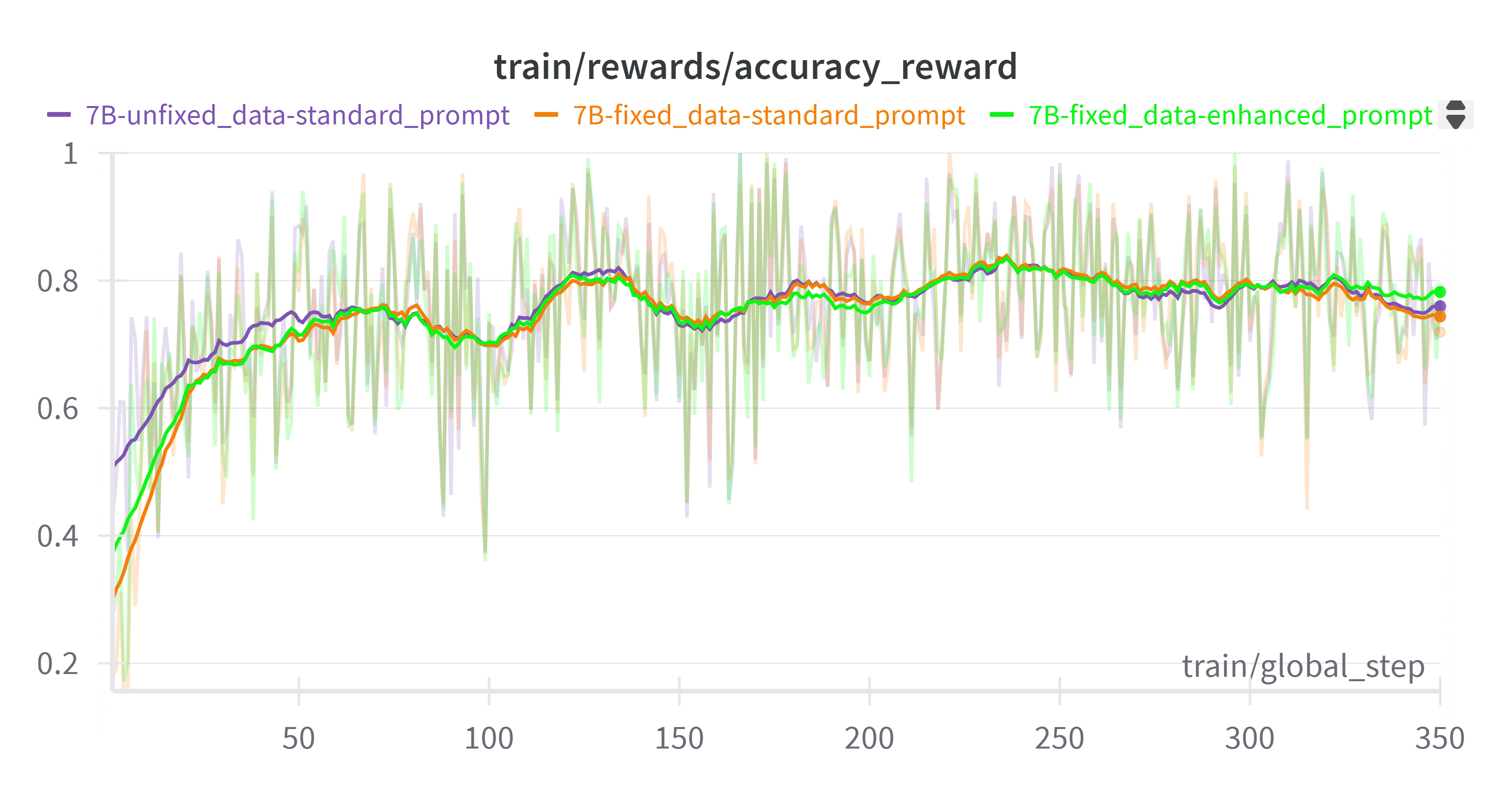

Format rewards on 7B model during training

Accuracy rewards on 7B model during training

The 7B model is more robust to the prompt which brings stronger initial format adherence

We tried the same experiment (fixed data and use enhanced prompt) on model 7B as we did on model 3B, and it turns out that the training rewards is almost the same. The accuracy reward going up along with the format reward right at the beginning. This reflects the fact that unlike the 3B model whose training performance may change significantly with minor adjustments to prompt, the 7B model exhibits relatively low sensitivity to cue modifications due to its stronger generalization ability and higher parameter capacity. This is also shown in that 7B models has stronger initial format adherence (0.75 initial format score) compared to 3B models (0.4)

5.3 System Prompt Variations

For instruct models, system prompts had minimal effect on format learning

We additionally tried training with the enhanced prompt passed in as a build-in system prompt, and found that the format rewards barely increased, which validates the point made in "MM-Eureka: Exploring Visual Aha Moment with Rule-based Large-scale Reinforcement Learning" (https://arxiv.org/abs/2503.07365): for the instruct model, which should have retained the model's built-in system prompt and included format-related information in the user prompt. In contrast, for the base model, we should provide format information in the system prompt.

| Model | COCO_pos_1 (mAP) | Precision (IoU=0.5) | Recall (IoU=0.5) |

|---|---|---|---|

| 3B base (Qwen2.5-VL-3B-Instruct) | 23.6 | 70.14 | 47.69 |

| 3B (fixed data & enhanced prompt) | 24.5 | 68.2 | 50.27 |

| 7B base (Qwen2.5-VL-7B-Instruct) | 24.6 | 71.53 | 50.56 |

| 7B (fixed data & enhanced prompt) | 24.6 | 68.1 | 55.15 |

We finally evaluated our models on COCO pos1. The 7B model did not show any improvement on mAP, while 3B model gained some improvement, so perhaps using the same data (OCDEval), the 3B model with a smaller training parameters might be able to achieve more improvement compared to the 7B with a stronger base capability.

However, all of the above findings were obtained by training on the OVDEval dataset, and perhaps its data characteristics may have some impact. In the future, we will try to continue the experiments on other datasets (e.g., D³) to verify these findings.

6. RL vs SFT Training Comparison

6.1 Basic Performance Comparison

We conducted an initial comparison between basic Reinforcement Learning (RL) and Supervised Fine-Tuning (SFT) approaches using identical training data (COCO) and base models (Qwen2.5-VL-3B). In this comparison, we utilized the MAP reward for reinforcement learning. Note that these results reflect basic RL implementation without advanced techniques like KL-divergence adjustment (KL=0) or output length control rewards that were explored later.

| Training Method | REFCOCO | REFGTA | COCO_filtered (mAP) |

|---|---|---|---|

| Base (Qwen2.5-VL-3B) | 73.73 | 71.8 | 23.7 |

| Basic RL Training | 73.87 | 67.4 | 23.5 |

| SFT Training | 83.20 | 70.4 | 25.5 |

Note: These initial results showed SFT outperforming basic RL without advanced techniques. Later experiments with optimized RL rewards showed substantial improvements over these baseline RL results.

6.2 Token Generation Analysis

| Model | Min Tokens | Max Tokens (excluding ≥3000) | Average Tokens (excluding ≥3000) | Records with Tokens ≥3000 |

|---|---|---|---|---|

| Base Model | 34 | 1892 | 139.752 | 281 |

| RL Model | 79 | 2999 | 240.563 | 534 |

| SFT Model | 48 | 2999 | 192.259 | 238 |

Key observation: SFT-trained models tend to be more concise in their outputs while maintaining better performance. RL with MAP rewards tended to generate more verbose outputs, potentially "hacking" the reward by generating more bounding boxes.

7. Other Implementation Findings

7.1 Trainer Consistency Verification

Multiple implementations were tested for consistency:

| Code Version | SuperCLEVR Test Score |

|---|---|

| Old Version [734e46] | 85 |

| New Version [a301eb] | 87 |

| VLLM Version [85d9f4] | 87 |

All implementations showed consistent performance, validating the codebase's stability.

7.2 Model Size Impact (3B vs 7B)

Performance comparison on OVDEVAL:

| Model | OVDEval Avg (mAP) | Proper Noun Avg | Attribute Avg | Position | Relationship | Negation |

|---|---|---|---|---|---|---|

| 7B Best | 45.27 | 53.33 | 25.10 | 65.4 | 26.1 | 56.4 |

| 3B Best | 43.43 | 51.87 | 24.70 | 63.1 | 26.6 | 50.9 |

7B models generally performed slightly better, though the difference was not dramatic. The 7B model had particular advantages on negation tasks.

7.3 Completion Length and Batch Size

- Increasing maximum completion length to 2048 while reducing batch size did not yield significant improvements

- The original configuration (maximum length 1024, larger batch size) performed better in terms of processing efficiency and response quality

8. Key Takeaways and Best Practices

Training Methodology

- Well-tuned RL (especially with cosine rewards) can outperform SFT

- Basic RL without careful reward engineering may underperform SFT

Data Preparation

- Prioritize single-class, single-box object detection training data

- Consider the precision-recall trade-offs when training on small objects

- Avoid pure single region label training for object detection tasks

Reward Selection

- Use AP over AP50 for more granular feedback

- weighted_sum with cosine reward provides the best performance

- Be cautious with repetition rewards as they may reduce recall

Prompt Engineering

- Even minor grammatical issues can impact training convergence

- Well-structured prompts can significantly accelerate training

- Keep structured thinking processes in prompts

- 7B models are less sensitive to prompt formatting than 3B models

Implementation

- Code implementations are stable across versions

- VLLM can be safely used as an alternative implementation for faster generation

Future Research Directions

- Investigate optimal mixing ratios for multi-task training

- Develop improved reward functions for RL training

- Research methods to better handle small object detection without precision loss

We continue to explore new approaches and welcome community feedback and contributions.